Comments by Brian Shilhavy

Editor, Health Impact News

AI chat programs have become such a huge part of online culture so quickly that many people are still fooled by them, overlooking their infancy and limitations, even though the owners of these AI programs have clearly warned the public that the text they produce CANNOT be trusted: they often produce false information, or simply make things up when the data needed to produce a correct response is not available.

They refer to this false information as AI “hallucination.”

Two recent news stories demonstrate just how foolish and dangerous it is to trust the output of programs like ChatGPT in real-world applications.

Isaiah Poritz of Bloomberg Law reported this week that OpenAI, the company that produces ChatGPT, was hit with its first defamation lawsuit after ChatGPT allegedly falsely accused a Georgia man of embezzling money.

OpenAI Hit With First Defamation Suit Over ChatGPT Hallucination

OpenAI LLC is facing a defamation lawsuit from a Georgia radio host who claimed the viral artificial intelligence program ChatGPT generated a false legal complaint accusing him of embezzling money.

The first-of-its-kind case comes as generative AI programs face heightened scrutiny over their ability to spread misinformation and “hallucinate” false outputs, including fake legal precedent.

Mark Walters said in his Georgia state court suit that the chatbot provided the false complaint to Fred Riehl, the editor-in-chief of the gun publication AmmoLand, who was reporting on a real-life legal case playing out in Washington state.

Riehl asked ChatGPT to provide a summary of Second Amendment Foundation v. Ferguson, a case in Washington federal court accusing the state’s Attorney General Bob Ferguson of abusing his power by chilling the activities of the gun rights foundation.

However, ChatGPT allegedly provided a summary of the case to Riehl that said the Second Amendment Foundation’s founder Alan Gottlieb was suing Walters for “defrauding and embezzling funds” from the foundation as chief financial officer and treasurer.

“Every statement of fact in the summary pertaining to Walters is false,” according to the defamation suit, filed on June 5.

OpenAI didn’t immediately return a request for comment. (Full article.)

In another recent report, an attorney was actually foolish enough to use ChatGPT to research case law for a real lawsuit, and it returned bogus cases that did not even exist, which were then cited in a filing in a New York court! The judge, understandably, was outraged.

Lawyer uses ChatGPT in court and now ‘greatly regrets’ it

A New York attorney has been blasted for using ChatGPT for legal research in a lawsuit against a Colombian airline.

Steven Schwartz, an attorney with the New York law firm Levidow, Levidow & Oberman, was hired by Robert Mata to pursue an injury claim against Avianca Airlines.

Mata claims he sustained the injury from a serving cart during his flight with the airline in 2019, according to a May 28 report from CNN Business.

However, after a judge noticed inconsistencies and factual errors in the case documentation, Schwartz has admitted to using ChatGPT for his legal research, according to a May 24 sworn affidavit.

He claims that this was his first time using ChatGPT for legal research and that he “was unaware of the possibility that its content could be false.”

In an April 5 court filing, the judge presiding over the case stated:

“Six of the submitted cases appear to be bogus judicial decisions with bogus quotes and bogus internal citations.”

The judge further claimed that certain cases referenced in the submissions did not exist, and there was an instance where a docket number on a filing was mixed up with another court filing. (Full article.)

I came across an excellent article today by Aleksandar Svetski exposing the hype around Chat AI and detailing its real dangers, which he refers to as “The Great Homogenization,” in which all the data on the Internet is controlled to fit a single narrative, something that I have been warning about as well.

This is a must-read article if you want to fully understand what is going on today with Chat AI, and how to fight back.

IF WE’RE NOT CAREFUL, THE AI REVOLUTION COULD BECOME THE ‘GREAT HOMOGENIZATION’

As artificial intelligence grows, so do attempts to control it. But, if we can differentiate the real risks from the fake risks, this technology could be used to encourage diversity of thought and ideas.

by Aleksandar Svetski

Bitcoin Magazine

Excerpts:

The world is changing before our very eyes. Artificial intelligence (AI) is a paradigm-shifting technological breakthrough, but probably not for the reasons you might think or imagine.

You’ve probably heard something along the lines of, “Artificial general intelligence (AGI) is around the corner,” or, “Now that language is solved, the next step is conscious AI.”

Well… I’m here to tell you that those concepts are both red herrings. They are either the naive delusions of technologists who believe God is in the circuits, or the deliberate incitement of fear and hysteria by more malevolent people with ulterior motives.

I do not think AGI is a threat or that we have an “AI safety problem,” or that we’re around the corner from some singularity with machines.

But…

I do believe this technological paradigm shift poses a significant threat to humanity (which is, in fact, about the only thing I can somewhat agree on with the mainstream), but for completely different reasons.

To learn what they are, let’s first try to understand what’s really happening here.

INTRODUCING… THE STOCHASTIC PARROT!

Technology is an amplifier. It makes the good better, and the bad worse.

Just as a hammer is technology that can be used to build a house or beat someone over the head, computers can be used to document ideas that change the world, or they can be used to operate central bank digital currencies (CBDCs) that enslave you to crazy, communist cat ladies working at the European Central Bank.

The same goes for AI. It is a tool. It is a technology. It is not a new lifeform, despite what the lonely nerds who are calling for progress to shut down so desperately want to believe.

What makes generative AI so interesting is not that it is sentient, but that it’s the first time in our history that we are “speaking” or communicating with something other than a human being, in a coherent fashion. The closest we’ve been to that before this point has been with… parrots.

Yes: parrots!

You can train a parrot to kind of talk and talk back, and you can kind of understand it, but because we know it’s not really a human and doesn’t really understand anything, we’re not so impressed.

But generative AI… well, that’s a different story. We’ve been acquainted with it for six months now (in the mainstream) and we have no real idea how it works under the hood. We type some words, and it responds like that annoying, politically-correct, midwit nerd who you know from class… or your average Netflix show.

In fact, you’ve probably even spoken with someone like this during support calls to Booking.com, or any other service in which you’ve had to dial in or web chat. As such, you’re immediately shocked by the responses.

“Holy shit,” you tell yourself. “This thing speaks like a real person!”

The English is immaculate. No spelling mistakes. Sentences make sense. It is not only grammatically accurate, but semantically so, too.

Holy shit! It must be alive!

Little do you realize that you are speaking to a highly sophisticated stochastic parrot. As it turns out, language is a little more rules-based than we all thought, and probability engines can actually do an excellent job of emulating intelligence through the frame or conduit of language.

The law of large numbers strikes again, and math achieves another victory!
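
The “stochastic parrot” idea can be illustrated with a toy. The sketch below is not how ChatGPT works: real large language models use neural networks trained on tokens, not a lookup table of word pairs. As a deliberately simplified assumption, it uses a word-level bigram model, which records which words followed which in some sample text and then generates new text by repeatedly picking a next word at random, in proportion to how often it was observed. The function names `train_bigrams` and `parrot` are hypothetical, chosen only for this illustration.

```python
from __future__ import annotations

import random
from collections import defaultdict


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words observed immediately after it.

    Repeated followers are kept, so a uniform pick from the list
    is effectively a pick weighted by observed frequency.
    """
    words = text.split()
    followers: defaultdict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        followers[current].append(following)
    return dict(followers)


def parrot(followers: dict[str, list[str]], start: str, length: int,
           seed: int | None = None) -> str:
    """Generate up to `length` words by sampling one observed follower at a time."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    output = [start]
    while len(output) < length:
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a follower
            break
        output.append(rng.choice(options))
    return " ".join(output)


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat ate the fish"
    model = train_bigrams(corpus)
    print(model["the"])  # ['cat', 'mat', 'cat', 'fish']
    print(parrot(model, "the", 8, seed=42))
```

Every word pair in the output was seen in the sample text, so the result can read as locally fluent even though the program has no notion of whether anything it says is true, a crude analogue of the fluent-but-false output the lawsuits above describe.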

But… what does this mean? What the hell is my point?

That this is not useful? That it’s proof it’s not a path to AGI?

Not necessarily, on both counts.

There is lots of utility in such a tool. In fact, the greatest utility probably lies in its application as “MOT,” or “Midwit Obsolescence Technology.” Woke journalists and the countless “content creators” who have for years been talking a lot but saying nothing, are now like dinosaurs watching the comet incinerate everything around them. It’s a beautiful thing. Life wins again.

Of course, these tools are also great for ideating, coding faster, doing some high-level learning, etc.

But from an AGI and consciousness standpoint, who knows? There mayyyyyyyyyyy be a pathway there, but my spidey sense tells me we’re way off, so I’m not holding my breath. I think consciousness is so much more complex, and to think we’ve conjured it up with probability machines is some strange blend of ignorant, arrogant, naive and… well… empty.

So, what the hell is my problem and what’s the risk?

ENTER THE AGE OF THE LUI

Remember what I said about tools.

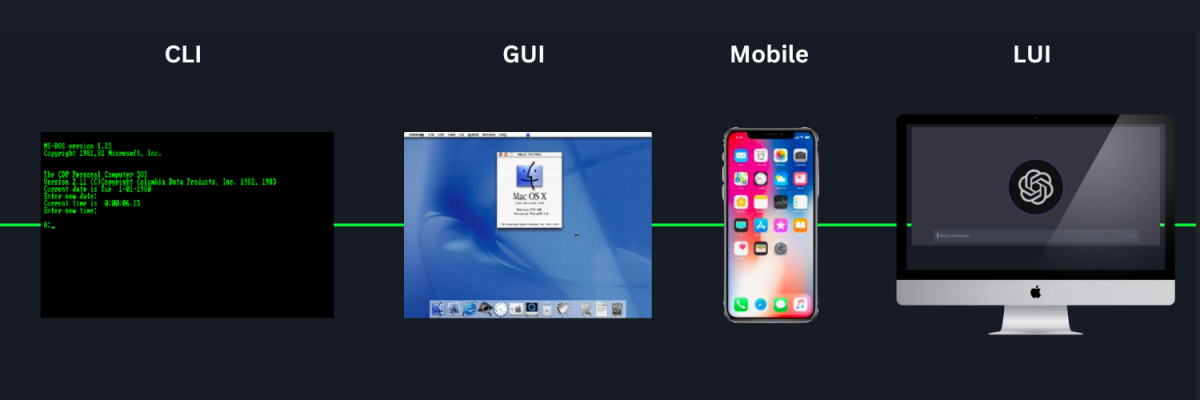

Computers are arguably the most powerful tool mankind has built. And computers have gone through the following evolution:

- Punch cards

- Command line

- Graphical user interface, i.e., point and click

- Mobile, i.e., thumbs and tapping

And now, we’re moving into the age of the LUI, or “Language User Interface.”

This is the big paradigm shift. It’s not AGI, but LUI. Moving forward, every app we interact with will have a conversational interface, and we will no longer be limited by the bandwidth of how fast our fingers can tap on keys or screens.

Speaking “language” is orders of magnitude faster than typing and tapping. Thinking is probably another level higher, but I’m not putting any electrodes into my head anytime soon. In fact, LUIs probably make Neuralink-type tech obsolete, because the risks of implanting chips into your brain will outweigh any marginal benefit over just speaking.

In any case, this decade we will go from tapping on graphical user interfaces to talking to our apps.

And therein lies the danger.

In the same way that Google today determines what we see in searches, and Twitter, Facebook, TikTok and Instagram all “feed us” through their feeds, generative AI will tomorrow determine the answers to every question we have.

The screen not only becomes the lens through which you ingest everything about the world; it becomes your model of the world.

Mark Bisone wrote a fantastic article about this recently, which I urge you to read:

“The problem of ‘screens’ is actually a very old one. In many ways it goes back to Plato’s cave, and perhaps is so deeply embedded in the human condition that it precedes written languages. That’s because when we talk about a screen, we’re really talking about the transmission of an illusory model in an editorialized form.

“The trick works like this: You are presented with the image of a thing (and these days, with the sound of it), which its presenter either explicitly tells you or strongly implies is a window to the Real. The shadow and the form are the same, in other words, and the former is to be trusted as much as any fragment of reality that you can directly observe with your sensory organs.”

And, for those thinking that “this won’t happen for a while,” well, here are the bumbling fools making a good attempt at it.

THE ‘GREAT HOMOGENIZATION’

Imagine every question you ask, every image you request, every video you conjure up, every bit of data you seek, being returned in such a way that is deemed “safe,” “responsible” or “acceptable” by some faceless “safety police.”

Imagine every bit of information you consume has been transformed into some lukewarm, middle version of the truth, that every opinion you ask for is not really an opinion or a viewpoint, but some inoffensive, apologetic response that doesn’t actually tell you anything (this is the benign, annoying version) or worse, is some ideology wrapped in a response so that everything you know becomes some variation of what the manufacturers of said “safe AI” want you to think and know.

Imagine you had modern Disney characters, like those clowns from “The Eternals” movie, as your ever-present intellectual assistants. It would make you “dumb squared.”

“The UnCommunist Manifesto” outlined the utopian communist dream as the grand homogenization of man:

If only everyone were a series of numbers on a spreadsheet, or automatons with the same opinion, it would be so much easier to have paradise on earth. You could ration out just enough for everyone, and then we’d be all equally miserable proletariats.

This is like George Orwell’s thought police crossed with “Inception,” because every question you had would be perfectly captured and monitored, and every response from the AI could incept an ideology in your mind. In fact, when you think about it, that’s what information does. It plants seeds in your mind.

This is why you need a diverse set of ideas in the minds of men! You want a flourishing rainforest in your mind, not some mono-crop field of wheat, with deteriorated soil, that is susceptible to weather and insects, and completely dependent on Monsanto (or OpenAI or Pfizer) for its survival. You want your mind to flourish, and for that you need idea-versity.

This was the promise of the internet: a place where anyone can say anything. The internet has been a force for good, but it is under attack, whether through the de-anonymization of social profiles like those on Twitter and Facebook, the creeping KYC across all sorts of online platforms, or the algorithmic vomit spewed forth from the platforms themselves. We tasted that in all its glory from 2020 onward. And it seems to be only getting worse.

The push by WEF-like organizations to institute KYC for online identities, and tie it to a CBDC and your iris is one alternative, but it’s a bit overt and explicit. After the pushback on medical experimentation of late, such a move may be harder to pull off. An easier move could be to allow LUIs to take over (as they will, because they’re a superior user experience) and in the meantime create an “AI safety council” that will institute “safety” filters on all major large language models (LLMs).

Don’t believe me? Our G7 overlords are discussing it already.

Today, the web is still made up of webpages, and if you’re curious enough, you can find the deep, dark corners and crevices of dissidence. You can still surf the web. Mostly. But when everything becomes accessible only through these models, you’re not surfing anything anymore. You’re simply being given a synthesis of a response that has been run through all the necessary filters and censors.

There will probably be a sprinkle of truth somewhere in there, but it will be wrapped up in so much “safety” that 99.9% of people won’t hear or know of it. The truth will become that which the model says it is.

I’m not sure what happens to much of the internet when discoverability of information fundamentally transforms. I can imagine that, as most applications transition to some form of language interface, it’s going to be very hard to find things that the “portal” you’re using doesn’t deem safe or approved.

One could, of course, make the argument that in the same way you need the tenacity and curiosity to find the dissident crevices on the web, you’ll need to learn to prompt and hack your way into better answers on these platforms.

And that may be true, but it seems to me that for each time you find something “unsafe,” the route shall be patched or blocked.

You could then argue that “this could backfire on them, by diminishing the utility of the tool.”

And once again, I would probably agree. In a free market, such stupidity would make way for better tools.

But of course, the free market is becoming a thing of the past. What we are seeing with these hysterical attempts to push for “safety” is that they are either knowingly or unknowingly paving the way for squashing possible alternatives.

In creating “safety” committees that “regulate” these platforms (read: regulate speech), new models that are not run through such “safety or toxicity filters” will not be available for consumer usage, or they may be made illegal, or hard to discover. How many people still use Tor? Or DuckDuckGo?

And if you think this isn’t happening, here’s some information on the current toxicity filters that most LLMs already plug into. It’s only a matter of time before such filters become like KYC mandates on financial applications. A new compliance appendage, strapped onto language models like tits on a bull.

Whichever of these counter-arguments you make, both actually support my point that we need to build alternatives, and we need to begin that process now.

For those who still tend to believe that AGI is around the corner and that LLMs are a significant step in that direction, by all means, you’re free to believe what you want, but that doesn’t negate the point of this essay.

If language is the new “screen” and all the language we see or hear must be run through approved filters, the information we consume, the way we learn, the very thoughts we have, will all be narrowed into a very small Overton window.

I think that’s a massive risk for humanity.

We’ve become dumb enough with social media algorithms serving us what the platforms think we should know. And when they wanted to turn on the hysteria, it was easy. Language user interfaces are social media times 100.

Imagine what they could do with that the next time a so-called “crisis” hits.

It won’t be pretty.

The marketplace of ideas is necessary to a healthy and functional society. That’s what I want.

Their narrowing of thought won’t work long term, because it’s anti-life. In the end, it will fail, just like every other attempt to bottle up truth and ignore it. But each attempt comes with unnecessary damage, pain, loss and catastrophe. That is what I am trying to help avoid, and why I am helping to ring the alarm bell.

WHAT TO DO ABOUT ALL THIS?

If we’re not proactive here, this whole AI revolution could become the “great homogenization.” To avoid that, we have to do two main things:

1. Push back against the “AI safety” narratives: These might look like safety committees on the surface, but when you dig a little deeper, you realize they are speech and thought regulators.

2. Build alternatives, now: Build many and open source them. The sooner we do this, and the sooner they can run more locally, the better chance we have to avoid a world in which everything trends toward homogenization.

If we do this, we can have a world with real diversity — not the woke kind of bullshit. I mean diversity of thought, diversity of ideas, diversity of viewpoints and a true marketplace of ideas.

An idea-versity, which was the original promise of the internet, and one not bound by the low bandwidth of typing and tapping.

Source: https://healthimpactnews.com/2023/openai-hit-with-first-defamation-suit-over-chatgpt-hallucination-exposing-the-real-dangers-of-ai/